When the Product is Down the Blood Pressure is Up

If you have not gone through your first downtime, chances are your product might not have matured yet.

Stuff breaks all the time, and sometimes it breaks to the point where a tiny mistake or an unfortunate circumstance blocks your users from accessing the product altogether.

And it’s not in any way indicative of your team’s competence -unless it breaks every week, then, c’mon.

In 2019 alone AWS had 2 severe outages.

I have lived through a few of those myself. The first ones are always the scariest and are terrifying. With the users calling non-stop and the whole team sitting in cold sweats - this is the closest experience you can get to the mission-critical failure. This is the toughest test you can put on the team’s strength, on the relationship with your customers, on the value of your product itself.

But I survived, my whole team “survived” (even though we did have a member with a pre-existing who almost had a heart attack - not joking). And the craziest thing ever is that the users started loving us even more.

How did this happen?

Let’s backtrack for a second here and start from the beginning.

What is the downtime?

What I define as a “downtime” is the situation in which your users cannot use the flow of your app to get the main job done.

So, if the main “job” the users are coming to your site to do is to download a file, and everything all the views and buttons are loading, but the download is never complete - you are down, baby.

Another example, if you are let’s say Shopify and your integrated partner Oberlo starts pulling wrong prices for the inventory, it does not matter if the consumers are still able to make purchases - the main job is to “make purchases at the price that the businesses are eager to sell” will not be possible to do.

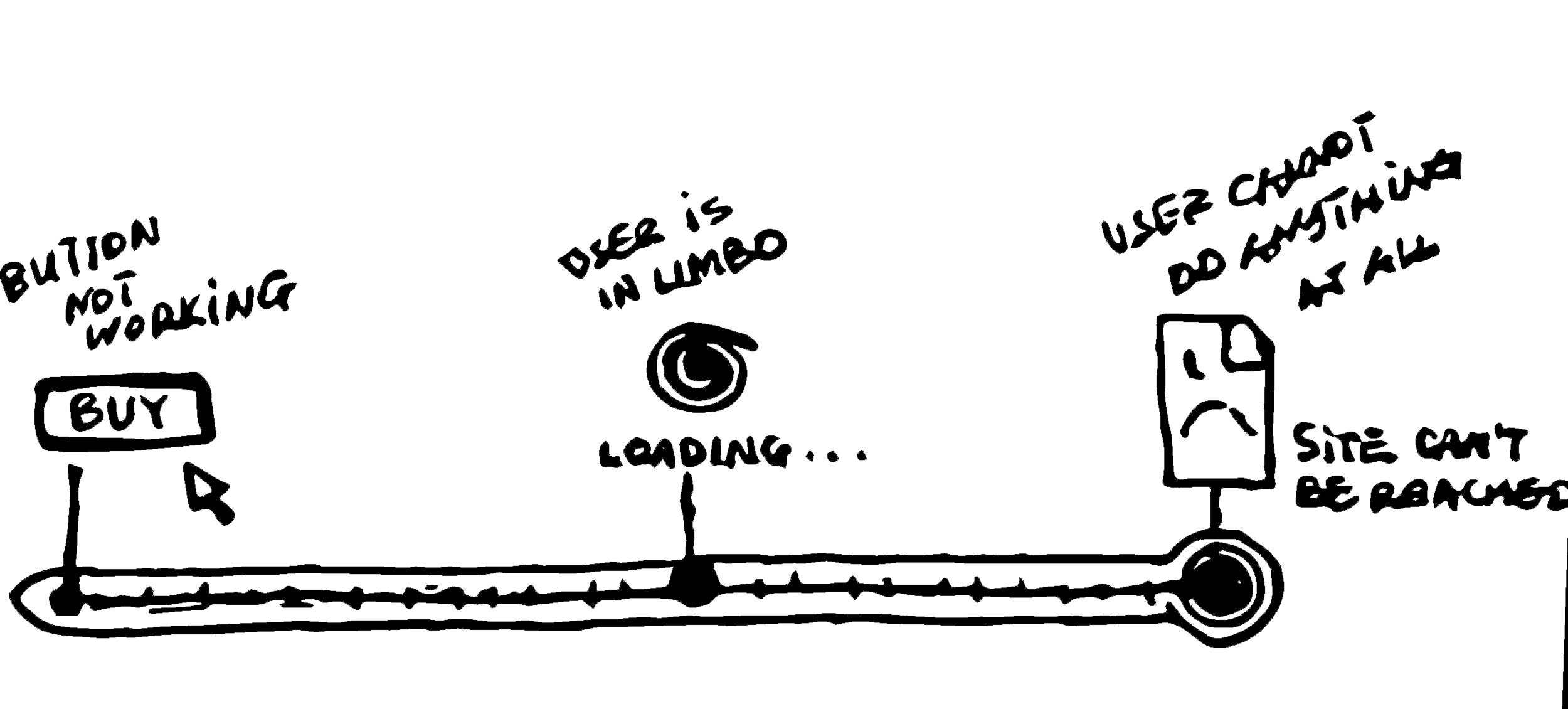

So, on a spectrum we are looking at something like this:

from a broken button that prevents the user to move to the next important step -> to a whole site being inaccessible

I was going for a scale, but this looks more like a thermometer — that also kinda works

What causes the downtime?

There can be multiple reasons, but from my experience, the 4 main categories are these:

Deploy went wrong

Server service is down

Critical bug

Attack

I’ve had the “pleasure” of being on the frontlines of the first three, and, the last one was a highly suspect but was not officially confirmed.

How do you find out?

User complaints

QA

Engineers

DevOps

If it’s a deploy went wrong - you will surely hear it from the DevOps. At that point, you have that same team (person) deployed to work on the solution right away.

Engineers might notice some bad stuff in the database or the error logs and inform the rest of the team that something is wrong. Once again, you have them working on the issue right away.

QA does its regular production run-through and stumbles upon the problem before anyone else. Now you are looking at an increased time leak because the QA has to confirm the issue on multiple browsers and devices to eliminate the testing environment, then, if confirmed, explain it to the engineering and the rest of the team. Then the engineering has to go in and confirm that the issue reported is correct.

Now - worst-case scenario - the frustrated customer calls in. First one, a second one, then all of a sudden you have dozens calling at the same time and, before anyone can understand what’s happening, you have already affected half of your daily active users!

What do you do?

debug

test

work on the resolution

estimate and blast out the message

There is no easy way out of this. What I found most useful is to completely isolate your thoughts about customers at that point and focus on finding as many facts as possible about the problem at hand. At the same time, people around you mind be inadvertently panicking and debugging the same logs as you - the result of it is normally a hot mess of everyone talking at the same time about the same issue, throwing in assumptions and trying to get their personal points across. That is a waste of time.

Before everyone starts running around like headless chickens, it is worth taking a few minutes to gather round and separate tasks.

Who is looking at the logs?

Who is doing user testing?

Who is checking different environments for clues?

The main thing that the customer-facing members would want to know, of course, is how soon will this be resolved, and the answer is almost surely “we do not know”.

What’s absolutely important is that the users know not to use the system right now to avoid any critical mistakes. Setting up a well-thought-through message and having it delivered through all the channels is critical at this time (Intercom makes it very easy). The time spent on the phone with the customers has to also be scripted so that the message does not get distorted.

How to turn this sh!tty experience around?

Here is the fan: you get the idea for the rest …

shower users with details

keep them overly updated

make it light and crack a joke if appropriate

apologize, apologize and be vulnerable

thank for patience once resolved

follow up the next day to make sure the relationship is healthy

I would not claim the bullets above to be the panacea, yet, they seemed to work pretty well in my product lifetime. When the proverbial sh!t hits the fan, your customers are the first ones to suffer, and if you see them suffer that, strangely enough, means they need you. That means you are the part of their daily routine, you are making their lives what they are now and by not allowing them to do their job, you take something away from their lives.

Now, there is a very fine line between getting your users to demonstrate how much they need you and forcing them to find your alternative. So one of the key things in the way you interact with them at that moment is by being human. I

mentioned the crafted messaging above and the scripted calls. Vulnerability, reassurance, and respect - are the main motives and tones that help show your product’s human side. I remember having a very upset customer in the early days who I was almost sure would leave. Surprisingly, he stuck around and later admitted that was because we were open to his feedback and really acted like we “cared” during the incident.

How to be prepared?

maintenance page

periodic drills and rehearsals

RUM tools

document

look back

The last thing anyone of us wants to do is practice these scenarios. It’s almost like calling for trouble. I cannot find time to pull the team out of their busy schedules to play war games, for sure. But I know I should. However, the things that anyone on the team can do is to contribute to the operational doc with the procedures described and lessons learned. Knowing that the issue can happen again, sharing stories with the new team members and being mentally ready, is really not that hard.

One of the best mechanisms one can build for their product is a way to go into the shut-down mode as quickly and as effortlessly as a switch of a statement. I would not go into the technicality of the implementation, but the result is amazing. Knowing that there is a safety parachute that the engineers can open any time there is some evident risk - makes it much easier to deal with. Monitoring tools like New Relic and SmartBear are also irreplaceable (here is a list of best RUMs on the market 2020).

Finally, a simple thing like having a solid maintenance page cannot be overlooked.

As a product person deeply involved in the development side of things, you have to deal with these situations more often than desired. I have to admit that, even though it’s insanely stressful, if handled right, it does result in better bonding with the team, the product, and the customers.